As a Senior Data Engineer with 9.5 years of experience, I focus on building high-performance data processing solutions using Apache Spark, Scala, PySpark, SQL, and AWS services. I thrive on designing robust ETL workflows, optimizing cloud-based data platforms, and ensuring efficient data governance. Always eager to collaborate on cutting-edge data solutions!

Skills & Expertise

🏗️ Big Data Technologies

- Apache Spark

- Kafka

- Hadoop

- Hive

- Databricks

☁️ Cloud Technologies

- AWS (S3, EMR, Lambda, Glue, Athena)

- Azure

🔧 Data Pipeline Automation

- Apache Airflow

- Rundeck

- Oozie

💻 Programming Languages

- Scala

- PySpark

- Python

- SQL

🏥 Domain Expertise

- Healthcare

- Retail

🚀 DevOps & CI/CD

- Git

- Jenkins

- Terraform

- Ansible

- Rundeck

Professional Experience

International Software Systems, Inc. | Oct 2023 – Present

Senior Prescription Drug Assistance Program

Built PySpark scripts for metrics and report generation, designed to integrate directly into ETL pipelines.

Innova Solutions | Aug 2019 – Oct 2023

Reconciliation Report

Redesigned and rewrote the reconciliation report generation scripts, migrating them from a legacy combination of Shell, Scala, and Python scripts to PySpark, and generalized the implementation for reuse. The reports are used for billing.

Created Amazon CloudWatch dashboards and alarms for alerting.

Consistent Patient Store (CPS) Analytics

Onboarded new tenants so that their data is transmitted from the Source to the Analytics platform, allowing provider-specific dashboards to be embedded in the Source/Tenant portal.

Interoperability

Designed and built a platform to support healthcare interoperability data. Its pipelines follow the Lambda Architecture to handle both batch and streaming processing.

Enhanced the Rundeck scheduler jobs for interoperability, updating their existing schedules.

File Processing Status – Sent the success/failure status of each processed file to the customer.

Airflow Migration

Migrated data pipeline orchestration from the Rundeck scheduler (shell scripts) to Apache Airflow (Python scripts).

COVID-19 Passport

Designed an hourly Spark batch pipeline based on the Lambda Architecture that combines multiple data points into a single view.

Referral Navigator Pipeline

The referral navigator pipeline runs daily using Delta Lake: it ingests referral events, de-normalizes nested JSON into tabular form, and saves the result as Parquet.

CommonWell

Used CommonWell for referential matching services: created the jobs and integrated them with upstream and downstream systems.

Accolade

Accolade is a patient advocate/navigator whose principal customers are self-insured employers. Although Accolade already assists its member community, it would be highly beneficial to reach a member before an episode of care occurs. Accolade therefore needed to receive a notification whenever one of its members had an eligibility request/response sent through the CHC Clearing House to one of its self-insured payer customers.

Transaction Volume and Billing Report - Created a daily report, sent to the customer, with time metrics for each request and response of every eligibility transaction from the previous day.

Health App

Consolidated the separate per-table configuration files into a single configuration file to reduce maintenance and simplify the CI/CD process.

Enhanced UDFs to support different output formats for new customer requirements.

Added data availability checks in the scheduler for databases, S3 paths, and FTP sources before creating the EMR cluster that runs the pipeline.

Normalized and validated US user addresses using the SmartyStreets API, tracking the success/failure state of each address in a separate table for easy analysis.

Orchestrated data pipelines with the Rundeck scheduler using shell scripts.

Tata Consultancy Services | Jan 2016 – Jul 2019

CNAD

Used Spark SQL to query and process data stored in HDFS.

Designed and orchestrated Oozie workflows to run Spark/Scala job flows and Python scripts.

Generated requirement-driven reports using Spark/Scala, AWS, and Airflow with Python scripting.

Handled build automation and dependency management with Maven and Gradle for packaging, testing, and deploying Spark and Java-based applications.

Pocket Piece

Created an automated email that sends reprocessed-record information to clients.

Set up TIBCO jobs to run as part of the orchestration framework.

BNAD

Implemented serverless infrastructure provisioning for EMR builds from a local machine, eliminating the need for Ansible.

Deployed Jenkins with Docker on an EC2 instance.

Drove Jenkins adoption to implement a continuous CI/CD process.

Designed and orchestrated Airflow workflows for STAF integration using Terraform, and created the pipelines and DAGs in Python.

Education

GITAM Deemed University

M.Tech (VLSI Design) - 2013 - 2015

GITAM Institute of Technology, GITAM University, Visakhapatnam, India

Saveetha School of Engineering

BE (Electronics & Communication Engineering) - 2008 - 2012

Saveetha School of Engineering, Saveetha University, Chennai, India

Certifications

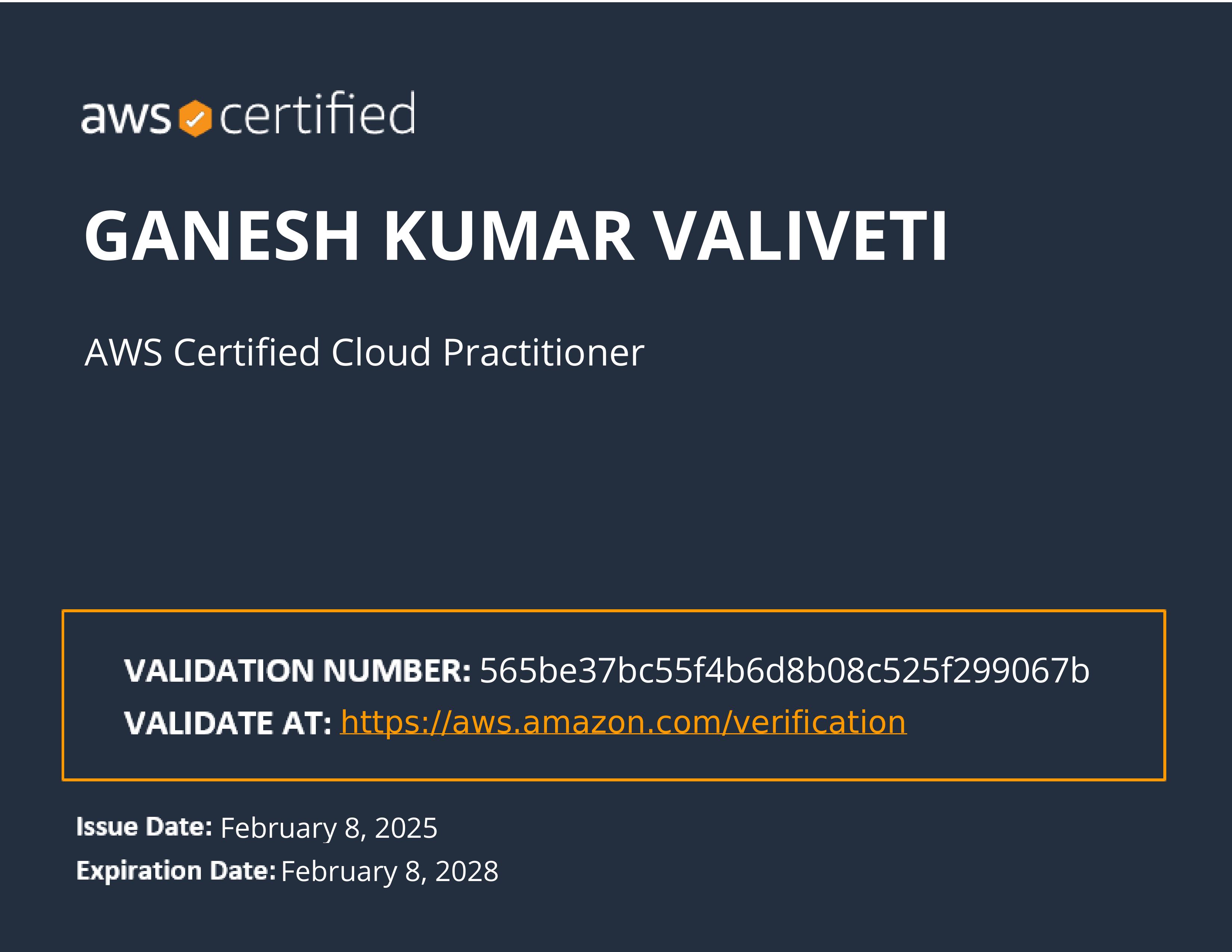

AWS Certified Cloud Practitioner

Microsoft Certified: Azure Fundamentals

Microsoft Certified: Azure Data Fundamentals

PCEP – Certified Entry-Level Python Programmer

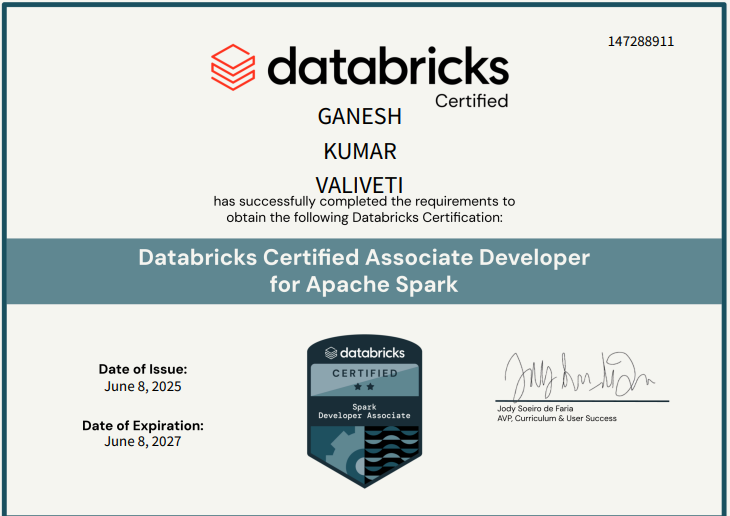

Databricks Certified Associate Developer for Apache Spark

AWS Certified Developer - Associate

Honors & Awards

Extra Miler Award

Innova Solutions · Aug 2020

Star of the Month Award

Tata Consultancy Services · Jun 2018